Abstract

Models for low-latency, streaming applications could benefit from the knowledge capacity of larger models, but edge devices cannot run these models due to resource constraints. A possible solution is to transfer hints during inference from a large model running remotely to a small model running on-device. However, this incurs a communication delay that breaks real-time requirements and does not guarantee that both models will operate on the same data at the same time. We propose knowledge boosting, a novel technique that allows a large model to operate on time-delayed input during inference, while still boosting small model performance. Using a streaming neural network that processes 8 ms chunks, we evaluate different speech separation and enhancement tasks with communication delays of up to six chunks, or 48 ms. Our results show larger gains where the performance gap between the small and large models is wide, demonstrating a promising method for large-small model collaboration for low-latency applications.
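To make the delay arithmetic concrete, the following is a minimal sketch of how hints computed on time-delayed input might be paired with the current chunk. It is not the paper's implementation: `large_model`, `delayed_hints`, and the representation of a hint are hypothetical placeholders, and only the 8 ms chunk size and the six-chunk maximum delay come from the abstract.

```python
from collections import deque

CHUNK_MS = 8  # chunk duration stated in the abstract


def delay_ms(delay_chunks, chunk_ms=CHUNK_MS):
    """Convert a communication delay in chunks to milliseconds."""
    return delay_chunks * chunk_ms


def delayed_hints(chunks, large_model, delay_chunks):
    """Pair each chunk with a hint computed from an earlier chunk.

    Assumption: `large_model` is a hypothetical callable standing in for
    the remote model. The hint for chunk t comes from chunk
    t - delay_chunks, or is None while no delayed hint has arrived yet.
    """
    in_flight = deque()  # hints still "in transit" from the remote model
    for chunk in chunks:
        in_flight.append(large_model(chunk))
        # A hint becomes available only after delay_chunks newer chunks.
        hint = in_flight.popleft() if len(in_flight) > delay_chunks else None
        yield chunk, hint


# Example: with a two-chunk delay, chunk 2 receives the hint from chunk 0.
pairs = list(delayed_hints([0, 1, 2, 3], lambda c: c * 10, delay_chunks=2))
```

In this sketch the small model would consume each `(chunk, hint)` pair on-device, so it never waits on the remote model; it simply uses whatever delayed hint is available.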

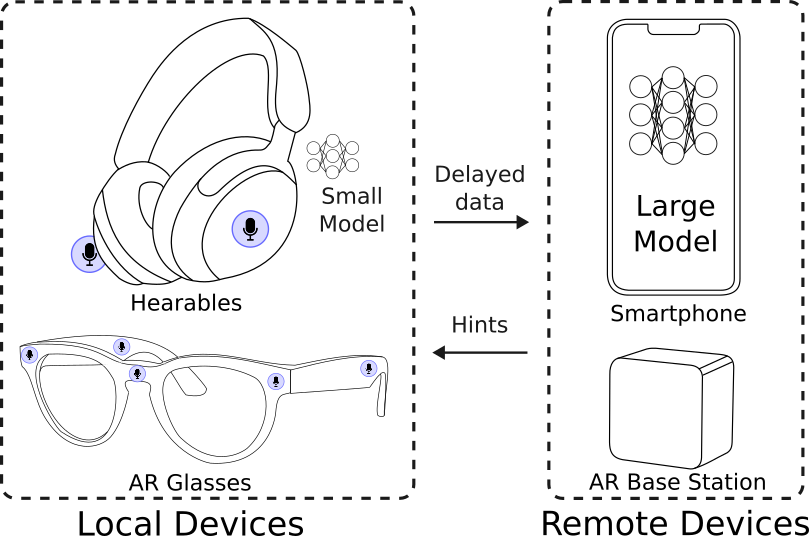

Target applications with knowledge boosting for augmented audio.

The knowledge boosting framework used for a source separation network.